Large Language Model Node

Basics

The Large Language Models node allows you to perform a wide range of text-based tasks using language models. This node is highly flexible and can be configured to generate responses, process user inputs, and provide intelligent text-based outputs for your workflows.

Inputs

Model: Select the language model you wish to use. Different models may have varying levels of capability and performance. Ensure you select the one best suited for your task. Fleak offers various models:

gpt-4o: This model aims to advance natural language understanding, generation, and adaptation capabilities. Expected innovations may include improvements in model architecture, training methodologies, and the ability to handle increasingly complex language tasks at scale.gpt-4o-mini: A scaled-down version of the gpt-4o model, focused on delivering efficient and high-quality natural language understanding and generation. It balances performance and computational efficiency, making it suitable for scenarios where resource constraints are a consideration.gpt-3.5-turbo: An enhanced version of GPT-3.5 designed to deliver faster inference with improved efficiency. This model retains the strong language understanding capabilities of its predecessors while optimizing speed, making it ideal for applications that require real-time interactions.llama3.1-70b-8192: An advanced language model with 70 billion parameters, optimized for deep understanding of complex text inputs and long-context reasoning. The 8192 context length allows it to manage extensive input sequences, supporting sophisticated applications like multi-turn conversations and detailed document summarization.llama3.1-8b-8192: A smaller variant of llama3.1 with 8 billion parameters, providing efficient natural language processing while maintaining a relatively long context length of 8192. This model strikes a balance between computational cost and handling longer input sequences, making it suitable for moderate-scale tasks.llama3-70b-8192: A previous version of llama with 70 billion parameters, offering robust natural language capabilities. With an 8192 token context window, it is designed to manage complex text and context, suitable for demanding applications in conversational AI and content generation.llama3-8b-8192: A version of llama3 with 8 billion parameters, focusing on delivering efficient language understanding for tasks that require a balance between capability and computational efficiency. The extended context length of 8192 supports the handling of longer sequences in various applications.mixtral-8x7b-32768: A powerful ensemble model consisting of eight 7-billion parameter models, capable of managing extremely long contexts up to 32768 tokens. Designed for use cases requiring detailed understanding and continuity over lengthy input sequences, mixtral-8x7b-32768 is suitable for applications such as large-scale document analysis and in-depth conversational AI.

Messages

The Messages section allows you to define a conversation between the system and the user. The conversation is structured by assigning different roles and prompts.

-

Role: Select the role of the message. It can be:

- System: This role represents the person interacting with the LLM. The user inputs queries, prompts, or instructions to the model. The user's messages are typically questions, requests for information, or commands that guide the conversation.

- User: This role involves messages that set the behavior or context of the conversation. System messages can provide instructions or constraints to the assistant, guiding how it should respond. For example, a system message might instruct the assistant to adopt a particular tone, style, or focus on specific topics. These messages are not seen by the user but influence the assistant's responses.

- Assistant: This role is the LLM itself responding to the user's inputs. The assistant generates answers, provides information, and follows the instructions provided by the user and the system. The assistant aims to fulfill the user's requests and maintain a coherent, contextually appropriate conversation.

-

Prompt: Enter the content of the message. This can be text that guides the model’s behavior (e.g., “You are a helpful assistant”) or the actual query or instruction for the model to act upon. The Input can be a question, instruction, or incomplete sentence. The model uses it to generate answers, create content, or give summaries. One can also Input specific words or events, used to provide a set response, like in customer support or automated systems.

-

Add Message: Click on this button to add more messages, allowing you to build complex conversations or multi-turn interactions.

Usage Tips

- Start by defining a System message to set the context for the language model, such as defining its personality or task scope.

- Use User messages to create a dialogue flow, simulating a user interacting with the model.

Considerations

- Selecting an appropriate model will impact the quality and speed of the response. More advanced models may require higher computational resources.

- Define clear System messages to ensure the model behaves as expected throughout the conversation.

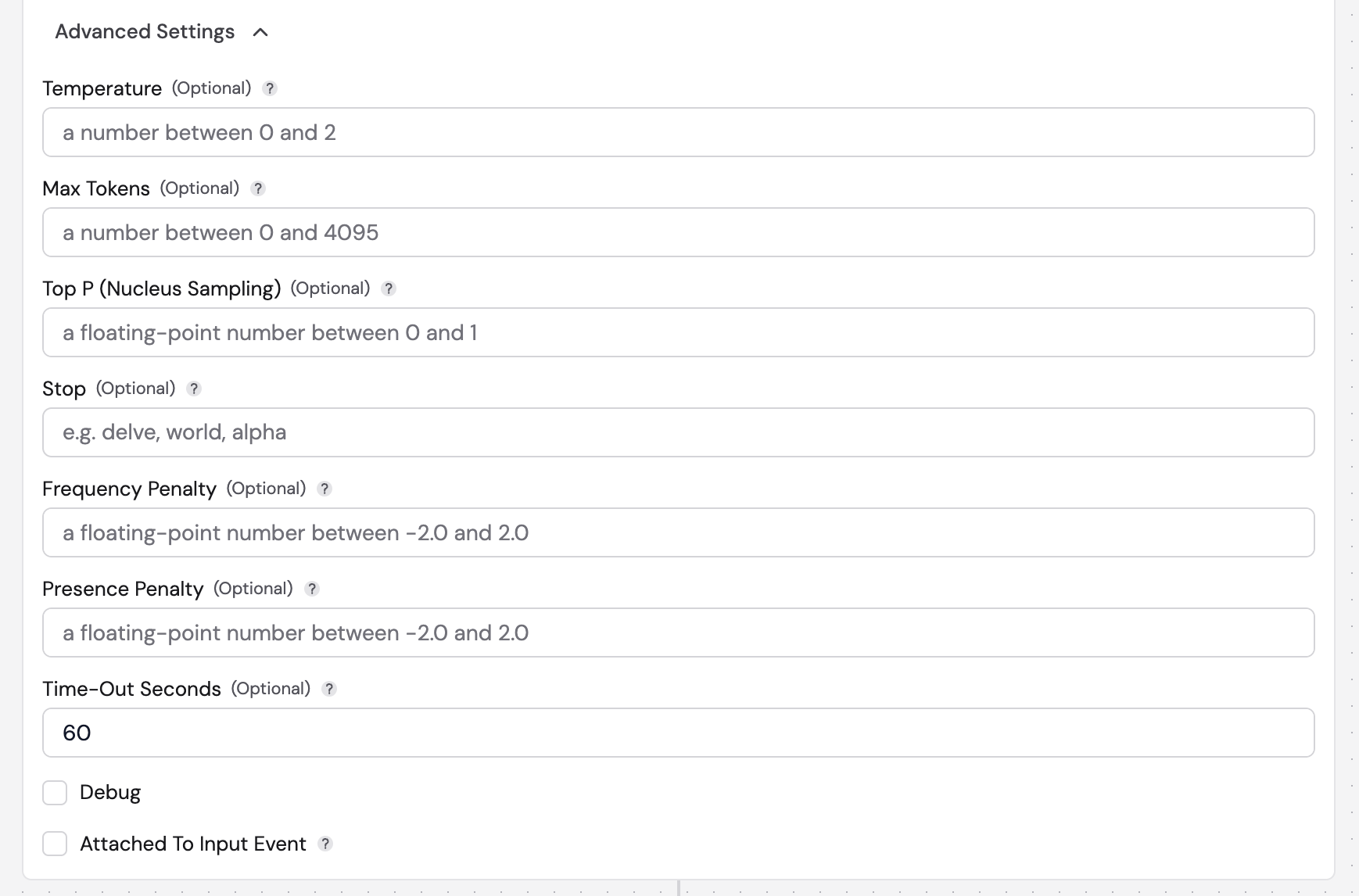

LLM Advanced Settings

The Advanced Settings in the LLM Node allow users to customize and optimize the language model's behavior for various text generation tasks. These settings provide fine-grained control over output generation, enabling tailored interactions that fit specific use cases in workflows.

Parameters

- Temperature: Controls the randomness of the model's output. A lower temperature (e.g., 0) produces deterministic

results, while a higher temperature (up to 2) encourages more creative and varied responses.

- Valid Range: A number between 0 and 2.

- Max Tokens: Specifies the maximum number of tokens that can be generated in the response, limiting the output

length to meet specific requirements.

- Valid Range: A number between 0 and 4095.

- Top P (Nucleus Sampling): This parameter defines the cumulative probability of token choices. By considering only

the tokens that contribute to the top 'p' probability mass, it enhances output diversity.

- Valid Range: A floating-point number between 0 and 1.

- Stop: Enables the specification of sequences that, when encountered, signal the model to stop generating further

tokens. This feature helps create concise and focused outputs.

- Example Values:

delve,world,alpha.

- Example Values:

- Frequency Penalty: Adjusts the likelihood of previously generated tokens being reused. A higher frequency penalty

reduces repetitive output, promoting variety in responses.

- Valid Range: A floating-point number between -2.0 and 2.0.

- Presence Penalty: Influences the introduction of new tokens based on previous generation. Increasing this value

decreases the chance of repeated token generation, fostering novel contributions.

- Valid Range: A floating-point number between -2.0 and 2.0.

- Time-Out Seconds: Sets a maximum allowable time for the model to generate a response, ensuring timely interactions

and avoiding delays in workflows.

- Default Value: 60 seconds.

- Debug: A checkbox option that, when checked, enables debug mode to provide additional information for troubleshooting and optimization of the output process.

- Attached To Input Event: A checkbox that specifies whether this node is linked to an input event. This setting influences how the node processes incoming data and generates responses.

Usage Tips

- Experiment with the Temperature settings to find the right balance between creativity and predictability based on specific needs.

- Set clear Stop sequences to avoid overly lengthy outputs and maintain focus in generated text.

- Use a combination of Frequency and Presence Penalties to fine-tune repetition levels in the output.

- Choosing the right settings in the Advanced Options will significantly affect the quality and relevance of the model's responses.

- Regularly review and adjust parameters to align with the evolving requirements of your applications.

Function Calling

Function Calling in the LLM Node facilitates the integration of custom functions to enhance the capabilities of the language model. This feature allows users to extend the functionality of the model by invoking external functions in response to specific triggers or conditions, enabling a wide range of applications from data retrieval to complex processing tasks.

How to Add a Function

To add a function into Fleak, follow these steps:

- Navigate to the Functions Tab: On the left side of the Fleak interface, locate and click on the Functions tab.

- Click on 'New Function': In the top right corner, click the New Function button to create a new function.

- Fill in Function Details: A dialog box will appear for adding the new function. Complete the following information:

-

Type: The main option is AWS Lambda, but we support other cloud functions.

-

AWS Lambda Function Name: Enter the name of your Lambda function (e.g.,

get_stock_price). This name will identify your function within the system. -

AWS Region: Choose the relevant AWS region where your Lambda function is hosted.

-

AWS Connection: Select an existing connection or create a new one to link your function to the appropriate resources.

-

Define Function Call: In the Function call definition field, enter the definition for your function in JSON format.

- Example:

{

"name": "get_stock_price",

"description": "Get the current stock price",

"strict": true,

"parameters": {

"type": "object",

"properties": {

"symbol": {

"type": "string",

"description": "The stock symbol"

}

}

}

}

- Create the Function: Once all fields are complete, click the Create button to finalize the addition of your function.

After adding a function, you can reference it in the Function Calling section of the LLM Node to trigger the function based on the model's output or other conditions

Considerations

- Ensure that the function's name is unique to avoid conflicts with other existing functions.

- Review the AWS permissions and configurations associated with the function to guarantee smooth operation once invoked through the LLM Node.

- Test the function thoroughly to confirm it performs as expected before deploying it in production workflows.

Structured Output

Structured outputs in Large Language Models (LLMs) are critical for creating predictable, machine-readable responses. This format is beneficial for applications that require consistent data formatting for easy parsing and integration with other systems.

Fleak's implementation relies on OpenAI's structured output capabilities. For more details, refer to OpenAI's documentation on structured outputs to learn more about how JSON Schema is used with GPT models.

Example: Configuring a Math Problem Workflow in Fleak

This example illustrates how to set up structured output to guide users through solving a math problem step-by-step

Step 1: Create a new Workflow

Inside your Fleak account, create a new empty workflow.

Step 2: Enter data into the HTTP Data Input node

Copy and paste the sample data below into the HTTP Data Input:

{

"content": "how can I solve 8x + 7 = -23"

}

Step 3: Add and Configure the Large Language Model (LLM) node

-

Choose a model: You can choose any OpenAI model - for this example we will be using gpt-4o

-

Configure the System’s Prompt: System is the role used for instructions. Make sure that role is selected and then copy and paste the prompt down below:

You are a helpful math tutor. Guide the user through the solution step by step.

- Configure the User’s Prompt: Set up the user input with dynamic placeholders to insert values from the input data:

{{$.content}}

- Enable Structured Output: Toggle on Enable Structured Output. Use the following JSON Schema to specify the format of the response:

{

"type": "json_schema",

"json_schema": {

"name": "math_response",

"schema": {

"type": "object",

"properties": {

"steps": {

"type": "array",

"items": {

"type": "object",

"properties": {

"explanation": {

"type": "string"

},

"output": {

"type": "string"

}

},

"required": [

"explanation",

"output"

],

"additionalProperties": false

}

},

"final_answer": {

"type": "string"

}

},

"required": [

"steps",

"final_answer"

],

"additionalProperties": false

},

"strict": true

}

}

- Run the Large Language Model Node: On the top right of the Large Language Model node, click “Run”. The response will be formatted according to the specified JSON Schema.

Step 4: Post-processing with SQL

To clean up and format the output data, use a SQL node:

-

Add a SQL node under the LLM node.

-

Copy and paste the following SQL code:

SELECT

(choices -> 0 -> 'message' -> 'content')::json as answer_output

FROM

events;

- Run the SQL node to format the results as defined by the JSON Schema in the structured output.