Databricks Integration

Databricks is a unified analytics platform that provides collaborative data engineering and data science capabilities. The Databricks integration in Fleak allows users to connect to their Databricks workspace and write processed data from workflows into Unity Catalog tables using the Databricks Sink node.

Setting Up Databricks Integration

Navigate to Integrations

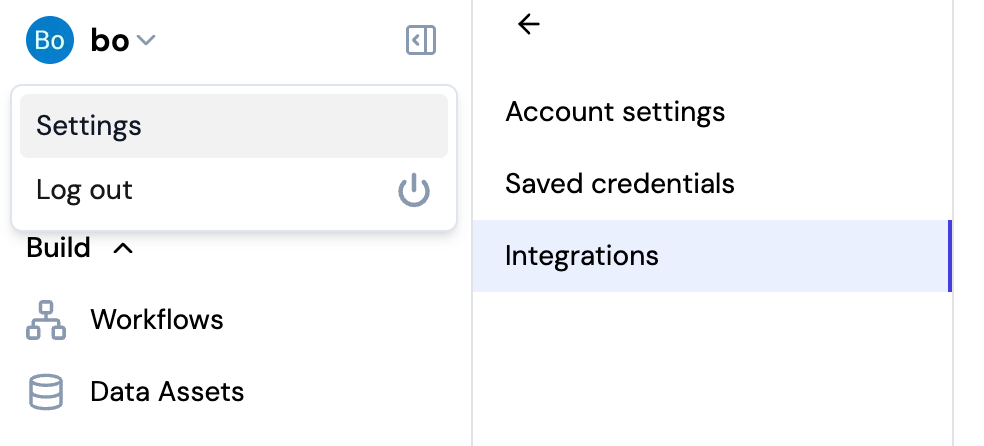

- Click on your username in the top left corner of the Fleak dashboard

- Select Settings from the dropdown menu

- Click on Integrations in the settings sidebar

Add Databricks Integration

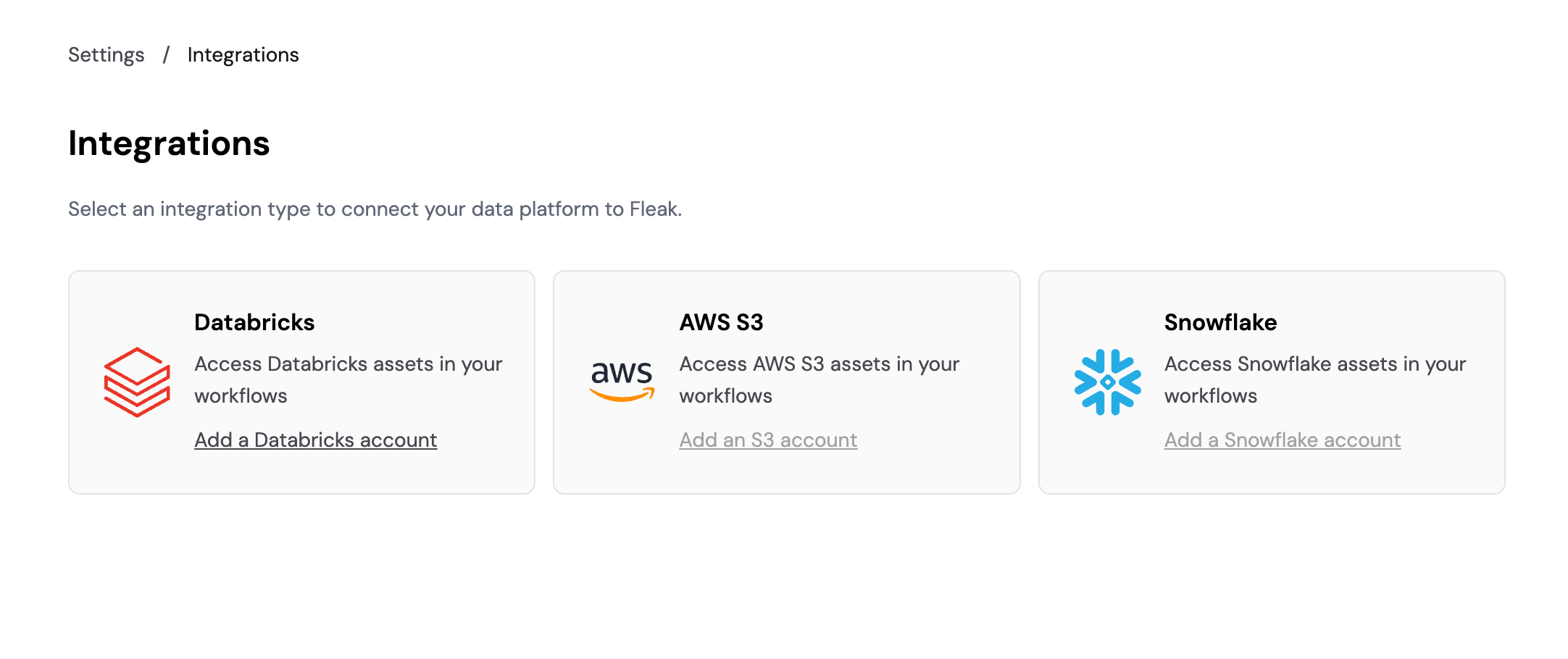

On the Integrations page, you'll see available integration types. Click Add a Databricks account to create a new Databricks integration.

Configure Connection Details

Fill in the following connection details:

Connection

- Connection Name: Give your connection a descriptive name (e.g., "My Databricks Connection")

- Host URL: Your Databricks workspace URL (e.g., https://xxx.cloud.databricks.com)

- OAuth Secret: Enter your Databricks M2M OAuth Client ID and Secret

Click Connect & Load Catalogs to validate your credentials and load available catalogs.
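If the connection fails, it can help to check the same credentials outside Fleak. The sketch below is not Fleak's implementation; it is a minimal illustration of the Databricks OAuth machine-to-machine (client credentials) flow followed by a Unity Catalog catalog listing, using only the Python standard library. The function names and the placeholder host are assumptions made for this example.

```python
import base64
import json
import urllib.parse
import urllib.request


def normalize_host(host_url: str) -> str:
    """Return the workspace URL with a scheme and without a trailing slash."""
    host = host_url.strip().rstrip("/")
    if not host.startswith(("http://", "https://")):
        host = "https://" + host
    return host


def build_token_request(host_url: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Build the client-credentials token request for a service principal."""
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": "all-apis"}
    ).encode()
    # The client ID and secret are sent as HTTP Basic credentials.
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    return urllib.request.Request(
        normalize_host(host_url) + "/oidc/v1/token",
        data=body,
        headers={
            "Authorization": "Basic " + basic,
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


def list_catalogs(host_url: str, client_id: str, client_secret: str) -> list:
    """Exchange the credentials for a token, then return visible catalog names."""
    token_request = build_token_request(host_url, client_id, client_secret)
    with urllib.request.urlopen(token_request, timeout=30) as resp:
        token = json.load(resp)["access_token"]

    catalogs_request = urllib.request.Request(
        normalize_host(host_url) + "/api/2.1/unity-catalog/catalogs",
        headers={"Authorization": "Bearer " + token},
    )
    with urllib.request.urlopen(catalogs_request, timeout=30) as resp:
        return [c["name"] for c in json.load(resp).get("catalogs", [])]
```

Calling `list_catalogs` with your Host URL, Client ID, and Secret raises an `urllib.error.HTTPError` when the credentials are rejected. On success it returns the catalogs the service principal can see.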

Connection Scope

After connecting, configure the scope for this integration:

- Catalog: Select the Unity Catalog to use

- Schema: Select the schema within the chosen catalog

- Staging Volume (optional): Select a volume for staging file uploads. Pipelines writing to this schema need a staging area for file uploads.

The Preview Data Assets section shows the tables available in the selected catalog and schema.
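The catalog, schema, and volume chosen here correspond to Unity Catalog's three-level namespace (`catalog.schema.table`) and to volume paths of the form `/Volumes/<catalog>/<schema>/<volume>/...`. The sketch below shows how those names fit together. The helper names and the sample values (`main`, `events`, `clicks`, `staging`) are hypothetical and are not taken from Fleak.

```python
def quote_identifier(name: str) -> str:
    """Backtick-quote a SQL identifier, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def table_name(catalog: str, schema: str, table: str) -> str:
    """Return the fully qualified three-level table name."""
    return ".".join(quote_identifier(part) for part in (catalog, schema, table))


def volume_path(catalog: str, schema: str, volume: str, filename: str = "") -> str:
    """Return the path of a volume, or of a file inside it."""
    base = f"/Volumes/{catalog}/{schema}/{volume}"
    return f"{base}/{filename.lstrip('/')}" if filename else base


# Hypothetical scope: catalog "main", schema "events", volume "staging".
print(table_name("main", "events", "clicks"))
print(volume_path("main", "events", "staging", "batch.parquet"))
```

Quoting each part separately keeps names that contain dashes or dots from being misread as extra namespace levels.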

Click Create to save the integration.

Using Databricks in Workflows

Once connected, Fleak can see the tables in Unity Catalog that the integration's credentials can access. Use the Databricks Sink node in your workflows to write data into those tables.

The service principal whose credentials you entered must have permission to read catalog metadata and write to the target tables.
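As an illustration of what such permissions can look like, the sketch below generates Unity Catalog `GRANT` statements that a workspace admin could review and run in Databricks SQL. The privilege set shown (`USE CATALOG`, `USE SCHEMA`, `SELECT`, `MODIFY`, and the volume privileges) is an assumption about what a write-oriented integration typically needs, not a list confirmed by Fleak. The function name and sample values are hypothetical.

```python
def grant_statements(principal, catalog, schema, tables, volume=None):
    """Return GRANT statements for one service principal.

    `principal` is the service principal's application ID.
    The chosen privileges are an assumption; adjust them to your policy.
    """
    def q(name):
        # Backtick-quote identifiers, doubling embedded backticks.
        return "`" + name.replace("`", "``") + "`"

    who = q(principal)
    scope = f"{q(catalog)}.{q(schema)}"
    statements = [
        f"GRANT USE CATALOG ON CATALOG {q(catalog)} TO {who}",
        f"GRANT USE SCHEMA ON SCHEMA {scope} TO {who}",
    ]
    for table in tables:
        # SELECT covers reads; MODIFY covers inserts, updates, and deletes.
        statements.append(
            f"GRANT SELECT, MODIFY ON TABLE {scope}.{q(table)} TO {who}"
        )
    if volume:
        # Only needed when a staging volume is configured.
        statements.append(
            f"GRANT READ VOLUME, WRITE VOLUME ON VOLUME {scope}.{q(volume)} TO {who}"
        )
    return statements


# Hypothetical application ID and object names.
for statement in grant_statements(
    "00000000-0000-0000-0000-000000000000", "main", "events", ["clicks"], "staging"
):
    print(statement + ";")
```

Generating the statements rather than running them keeps a human in the loop: the output can be reviewed against your access policy before anything is granted.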

For more information about configuring the Databricks Sink Node, please visit the Databricks Sink Node reference page.